Background and Strategy

At this point in the integration effort, with all the development work finished and validated, teams will feel a strong urge to turn off their computers, pour themselves a hot tea (or something stronger), take a nap, and generally never think about this project again.

But that would be a mistake.

Now -- with all the details of the integration still fresh in team members' minds -- is the right time to perform a final sweep of objects, value sets, lookup tables, terms, and any other assets created over the course of the effort. The idea is to (1) delete or archive any assets that will no longer be needed; and (2) complete any unfinished documentation or other housekeeping tasks that were put off during the hustle and bustle of a development sprint.

Every minute spent on this activity now will save many times that in the future. A rationale that can simply be remembered today may otherwise have to be forensically excavated from an old email or notebook later.

Finally, the exercise of chronologically reviewing and documenting a sequence of logic can be an excellent opportunity to identify inconsistencies and omissions, especially in the hand-offs between major set pieces of logic. Some of the most subtle, confounding bugs -- rarely occurring and/or difficult to trace back to their origins when experienced in the wild -- can be found "for free" in this manner. Again, timing matters: the review should happen while the entire picture of the integration is still present in the reviewer's active memory.

Detailed Implementation Guidance

-

Make sure that any data diagnostic objects that are not archived are set to Prevent Passive ELT, so that they are not included in every ELT that originates in the Source Data Layer via Run Downstream.

-

The Object Description field should be populated for all objects, including those in the Source Data Layer. Probably the most useful information to capture here is the grain of the underlying data; simply completing a single sentence that starts with "Contains one record per ..." will greatly help future users trying to get a handle on what that object contains.
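A grain statement is only useful if it is true, so it can be worth checking before writing it down. Below is a minimal sketch in Python, assuming the object's rows can be pulled out as a list of dictionaries and that the candidate grain is a set of key columns (the column names `patient_id` and `encounter_id`, the sample rows, and the helpers `grain_violations` and `describe_grain` are all hypothetical, not part of any platform API). The grain holds only if no combination of those key values appears more than once.

```python
from collections import Counter
from typing import Iterable, Mapping, Sequence


def grain_violations(
    rows: Iterable[Mapping[str, object]], grain: Sequence[str]
) -> dict:
    """Return each grain-key tuple that occurs more than once, with its count.

    An empty result means every row is uniquely identified by the grain columns.
    """
    counts = Counter(tuple(row[col] for col in grain) for row in rows)
    return {key: n for key, n in counts.items() if n > 1}


def describe_grain(grain_terms: Sequence[str]) -> str:
    """Build a 'Contains one record per ...' sentence for the Object Description."""
    return "Contains one record per " + " per ".join(grain_terms) + "."


# Hypothetical sample rows; real data would come from the object itself.
rows = [
    {"patient_id": 1, "encounter_id": "A", "dx_code": "E11.9"},
    {"patient_id": 1, "encounter_id": "B", "dx_code": "I10"},
    {"patient_id": 2, "encounter_id": "C", "dx_code": "E11.9"},
]

print(grain_violations(rows, ["patient_id", "encounter_id"]))  # {}
print(grain_violations(rows, ["patient_id"]))                  # {(1,): 2}
print(describe_grain(["patient", "encounter"]))
# Contains one record per patient per encounter.
```

Here the sample data is one record per patient per encounter, but not one record per patient, since patient 1 appears twice. A non-empty result means either the proposed description is wrong or the object contains duplicates worth investigating before the sweep is closed out.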

-

Don't be afraid to create new terms for new fields that are idiosyncratic to a particular source. Once created, a term follows the relevant field or fields from object to object throughout the data journey, including out to the Case Review screen. Under these circumstances -- that is, when defining a concept that might be local to a particular source system but not more broadly applicable to other source systems, or to the consolidated data in the natural object layer and beyond -- it is appropriate to use the source system's Namespace in the term configuration.

-

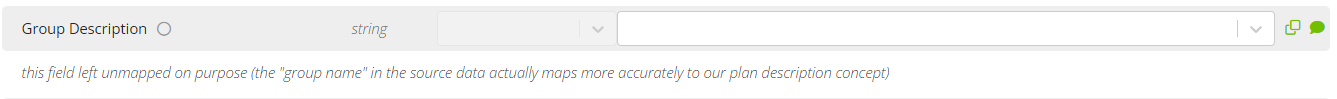

Comments are a less formal documentation mechanism and should be used early and often, especially in Semantic Mapping objects. Some of the most valuable comments may be on mappings that were not made, e.g.,