Background and Strategy

The Natural Object Layer serves as the foundation for a large number of other objects and measures. Once the appropriate Natural objects have been updated as part of a new source integration, the next step should be to refresh the remainder of the data model downstream of them, and to generate measure results that will be useful for the remaining validation tasks.

Notably, the Ursa Health Core Data Model includes the URSA-CORE Data Diagnostics report, which contains a number of measures designed to probe the data integrity of the data model. These measures, when filtered to the Source ID associated with the current integration effort (or split on that same Source ID), can quickly surface suspicious data patterns that the simpler object-level validation checks discussed earlier cannot detect.

For example, measure URSA-CORE-901, "Claims without Contemporaneous Membership", identifies claims with service dates outside the coverage period of a patient's plan membership, a pattern suggesting that the membership data may be incomplete. To review the full set of measures on the URSA-CORE Data Diagnostics report, see Measures.
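The idea behind a measure of this kind can be illustrated with a minimal Python sketch. It is not the actual definition of URSA-CORE-901: the record types and field names (`MembershipSpan`, `Claim`, `source_id`, and so on) are hypothetical, and membership spans are assumed to have inclusive end dates. The optional `source_id` argument mimics filtering the measure to the source under integration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class MembershipSpan:
    # Hypothetical membership record; `end` is the inclusive last day of coverage.
    patient_id: str
    start: date
    end: date


@dataclass(frozen=True)
class Claim:
    # Hypothetical claim record tagged with the source it came from.
    claim_id: str
    patient_id: str
    service_date: date
    source_id: str


def claims_without_membership(claims, spans, source_id=None):
    """Return IDs of claims whose service date falls outside every membership
    span on file for the claim's patient. If source_id is given, only claims
    from that source are considered (like filtering the measure)."""
    spans_by_patient = {}
    for span in spans:
        spans_by_patient.setdefault(span.patient_id, []).append(span)

    flagged = []
    for claim in claims:
        if source_id is not None and claim.source_id != source_id:
            continue
        covered = any(
            s.start <= claim.service_date <= s.end
            for s in spans_by_patient.get(claim.patient_id, [])
        )
        if not covered:
            flagged.append(claim.claim_id)
    return flagged


# Invented example data: one patient with six months of coverage.
spans = [MembershipSpan("P1", date(2023, 1, 1), date(2023, 6, 30))]
claims = [
    Claim("C1", "P1", date(2023, 3, 15), "SRC-NEW"),  # inside coverage
    Claim("C2", "P1", date(2023, 8, 2), "SRC-NEW"),   # after coverage ends
    Claim("C3", "P2", date(2023, 3, 1), "SRC-NEW"),   # no membership at all
]
print(claims_without_membership(claims, spans, source_id="SRC-NEW"))
# ['C2', 'C3']
```

A high share of flagged claims for the new source, relative to established sources, would suggest that membership data for that source is incomplete.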

Other reports, containing the traditional clinical or operational performance measures, can also be useful for identifying problems with new integration logic or with the newly integrated source data itself. For example, a PMPM (per member per month) measure, filtered or split to isolate the newly integrated source data, can often be eyeballed to judge whether the results are at least broadly plausible.
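As a rough illustration of what splitting such a measure by source involves, the following Python sketch computes PMPM as total paid amount divided by member months for each source. The input shapes and all numbers are invented for the example; a real PMPM measure would be defined in the data model, not in ad hoc code.

```python
from collections import defaultdict


def pmpm_by_source(paid_claims, member_months):
    """Compute per-member-per-month (PMPM) cost for each source.

    paid_claims: iterable of (source_id, paid_amount) pairs.
    member_months: dict mapping source_id -> total member months.
    Sources with zero member months are skipped to avoid division by zero.
    """
    paid = defaultdict(float)
    for source_id, amount in paid_claims:
        paid[source_id] += amount
    return {
        source_id: paid[source_id] / months
        for source_id, months in member_months.items()
        if months > 0
    }


# Invented figures: an established source and a newly integrated one.
paid_claims = [("SRC-A", 1200.0), ("SRC-A", 300.0), ("SRC-NEW", 90000.0)]
member_months = {"SRC-A": 5, "SRC-NEW": 100}
print(pmpm_by_source(paid_claims, member_months))
# {'SRC-A': 300.0, 'SRC-NEW': 900.0}
```

A new source whose PMPM is several times that of comparable established sources, as in this made-up example, would merit a closer look (for instance, for duplicated claims or mis-mapped amounts).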

There might also be scenarios in which something in the source data triggers an error in the ELT that precedes the generation of report results. For example, certain types of unhandled duplicates might survive the validation in the Natural Object Layer but cause a primary key violation later in the data journey.
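One hypothetical way to anticipate such a failure is to check, before a load, whether any combination of the target object's key columns occurs more than once. The Python sketch below assumes rows are plain dictionaries and that the key columns are known; the column names are illustrative only. Note that the duplicated rows in the example differ in another column, so they would pass a whole-row de-duplication yet still collide on the key.

```python
from collections import Counter


def find_duplicate_keys(rows, key_columns):
    """Return the key tuples that occur more than once in rows.

    rows: iterable of dicts.
    key_columns: the columns that together form the (hypothetical)
    primary key of a downstream object.
    """
    counts = Counter(tuple(row[col] for col in key_columns) for row in rows)
    return sorted(key for key, n in counts.items() if n > 1)


rows = [
    {"claim_id": "C1", "line_number": 1, "paid": 50.0},
    {"claim_id": "C1", "line_number": 2, "paid": 20.0},
    {"claim_id": "C1", "line_number": 1, "paid": 55.0},  # e.g. a resubmitted line
]
print(find_duplicate_keys(rows, ["claim_id", "line_number"]))
# [('C1', 1)]
```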

All suspicious report results and ELT errors should be investigated during this task. A good strategy is to use Case Review to identify a representative patient for each suspicious pattern and then review that patient's data in the Source Data Layer. If the same suspicious pattern is found there, the issue can probably be attributed to the source data itself rather than to a bug in the integration logic (though in these cases it is often still necessary to amend the integration logic to handle that pattern better). If, on the other hand, the source data look broadly correct, the reviewer can step downstream object by object, using Case Review to find where the suspicious pattern first appears, and then scrutinize the logic in that object. (This exercise might even inspire the creation of a new Validation Rule designed to catch future instances of this kind of issue.)
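The downstream walk described above is essentially a linear search for the first object in the lineage where the pattern shows up. The Python sketch below makes that explicit; the object names are hypothetical, and the `has_pattern` callable stands in for what is, in practice, a manual Case Review of the representative patient in each object.

```python
def first_object_with_pattern(lineage, has_pattern):
    """Walk objects in upstream-to-downstream order and return the first one
    in which the suspicious pattern appears, or None if it never does.

    lineage: object names ordered from the Source Data Layer onward.
    has_pattern: callable(object_name) -> bool.
    If the first entry (the source data) is returned, the source data itself
    is the likely culprit rather than the integration logic.
    """
    for obj in lineage:
        if has_pattern(obj):
            return obj
    return None


# Hypothetical lineage and Case Review findings.
lineage = ["Source Claims", "Natural Claim", "Synthetic Encounter", "Data Mart Utilization"]
observed = {"Synthetic Encounter", "Data Mart Utilization"}
print(first_object_with_pattern(lineage, lambda obj: obj in observed))
# Synthetic Encounter
```

Here the logic of the hypothetical "Synthetic Encounter" object would be the one to scrutinize.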

After any corrections are made, the affected ELT and reports should be rerun to confirm that the corrections had the desired effect.

Detailed Implementation Guidance

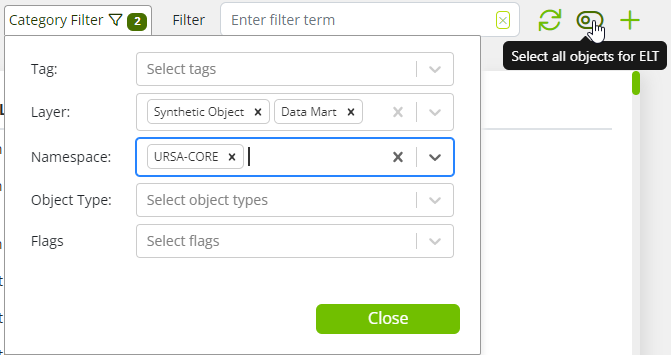

- In a typical Ursa Studio implementation, an ELT involving the Ursa Health Core Data Model objects downstream of the Natural objects can be set up easily using the Category Filter in the Object Workshop zone: select Synthetic Object and Data Mart in the Layers control, and URSA-CORE in the Namespace control, then click the Select all objects for ELT slider.

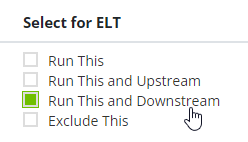

- If an integration affected only a small number of Natural objects, a more targeted ELT can be set up by selecting the Run This and Downstream checkbox for each of the affected Natural objects.

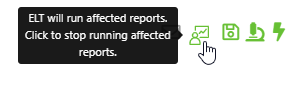

- Once an ELT preview has been generated (for example, by one of the methods described immediately above), any reports touched by any object included in that ELT can be scheduled to run upon successful completion of the ELT by clicking the Run affected reports icon in the ELT Progress blade.
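Conceptually, the targeted ELT and the affected-reports option described above amount to two set computations: collect the selected objects plus everything downstream of them, then find the reports that read from any of those objects. The Python sketch below illustrates this under stated assumptions; it is not Ursa Studio's implementation, and the dictionary-based lineage and all object and report names are hypothetical.

```python
from collections import deque


def downstream_objects(dependencies, selected):
    """Return the selected objects plus everything downstream of them
    (breadth-first traversal).

    dependencies: dict mapping each object to the objects that read from it.
    """
    result = set(selected)
    queue = deque(selected)
    while queue:
        obj = queue.popleft()
        for child in dependencies.get(obj, []):
            if child not in result:
                result.add(child)
                queue.append(child)
    return result


def affected_reports(report_inputs, elt_objects):
    """Return, sorted, the reports that use at least one object in the ELT.

    report_inputs: dict mapping report name -> set of objects it reads.
    """
    return sorted(
        report for report, inputs in report_inputs.items()
        if inputs & elt_objects
    )


dependencies = {
    "Natural Claim": ["Synthetic Encounter"],
    "Natural Member": ["Synthetic Member Month"],
    "Synthetic Encounter": ["Data Mart Utilization"],
}
report_inputs = {
    "Utilization Report": {"Data Mart Utilization"},
    "Enrollment Report": {"Synthetic Member Month"},
}
elt = downstream_objects(dependencies, ["Natural Claim"])
print(sorted(elt))
# ['Data Mart Utilization', 'Natural Claim', 'Synthetic Encounter']
print(affected_reports(report_inputs, elt))
# ['Utilization Report']
```

An actual ELT would also need to run these objects in dependency order; the sketch only determines which objects and reports are in scope.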