Overview

Data stored in delimited, fixed-width, or Excel files can be imported into Ursa Studio using the import object type. This object type enables users to select a file from a bucket or storage container, specify its parameters, and import it into a database table. If the table already exists in Ursa Studio, the user can either overwrite the existing file or append to the table. While appending, the user may denote a primary key or keys for the table, and any duplicate keys will be overwritten with the new data. If no keys are supplied for an append import, the new data will simply be appended without any change to the original data.

To create an import object users should navigate to the Object Workshop home screen and click the plus sign icon in the upper right corner. In the Create New Object screen, select the Import tile. In the Import object screen, update the parameters listed in the panels detailed below. When the import object's parameters have been specified, select the upload file icon in the upper right corner of the screen. The browser then redirects back to the Object Workshop home screen where the object will be running in the ELT Progress blade.

Naming

.png)

Object Namespace: Namespaces contain different information about data assets depending on the asset type, and in the case of objects, the associated data model layer. An import object's namespace typically describes the data’s source, e.g., BCBS, Humana; etc.

Object Name: The object name is how the object will be referenced throughout Ursa Studio. The convention used by the Ursa Health team for naming import objects has three parts, [“Source”]+[Namespace]+[File Description]. First, the word “Source” is used to indicate the object is associated with the Source Data layer of the data model. Second, the object’s namespace is included. Third, a brief description of the file is included. “Source ACMEHEALTH-BCBS Membership” is an example of how most import objects are named.

Object Description: The object's description provides a brief explanation on the contents of the object. The convention used by the Ursa Health team is to describe the grainsize of the file being imported in this field, e.g., “One row per member.” However, it is common for object descriptions to contain more than this minimum information.

Table Name: This field captures the name of the table that will be created in the import process. The Ursa Health team uses a convention for table names similar to the one used for object names. However, for table names this convention potentially has four parts, [“sd”]+[namespace]+[file description]+[#]. First, the token “sd” is used to indicate the object is associated with the Source Data layer of the data model. Second, the object’s namespace is included. Third, a brief description of the file is included. Forth, if the object represents one file in a series of files being imported, a number is appended. “sd_acmehealth_cms_membership” is an example of the structure used to name most import object tables.

Tags: Tags are general purpose labels that can be applied to a variety of data assets in Ursa Studio for identification and categorization. For example, the Ursa Health team uses an "In Development" tag to identify objects and measures that are currently under development.

Configuration

.png)

Bucket Name or Storage Container Name: This field enables users to select the import file’s bucket or storage container.

Impute Data Effective Date from Filename: When this option is selected, the file's data effective date, a required parameter for every file imported, will be automatically imputed from the filename. The following two parameters are for specifying the details of the data effective date's format and location in the filename. Note this option is only available for cloud-based deployments.

Date Format Mask: This field is for specifying the date format mask of the data effective date to be imputed from import file's name. The mask can consist of the characters Y, M, D, period, underscore, and hyphen. By default, the date format mask is assumed to be YYYYMMDD. When the file's date format mask is YYYYMMDD, this field can be left blank.

Mask Start Character: This option enables the specification of the (1-indexed) start character of the date format mask. By default, the date is assumed to be at the end of the filename. When the date is at the end of the filename, this field can be left blank. If the Mask Start Character is negative, it will be presumed to be counting backwards from the end of the filename.

Filename Filter Prefix: This field allows users to limit the items in the filename dropdown by entering in a prefix to search within. If multiple prefixes are entered, files that match any of the prefixes will be returned. This is especially useful if there are more than the limit of files in the storage container, as only the limit of files can be rendered in the filename dropdown per filter prefix.

Filename Filter Result Limit: This field enables the configuration of the max number of files returned by the prefix filter. If multiple prefixes are entered, the limit will apply to each prefix. Default 1000.

Filename: This field enables the selection of the appropriate file from this dropdown. If an xls or xlsx file with multiple sheets is selected, only the first sheet will be imported; users can utilize a preprocessing script if they need to import sheets other than the first. If the Impute Data Effective Date from Filename option is selected, a generic, masked filename can be chosen as the file to be imported. By selecting the "Latest file matching [file_name_mask]" option, the file to be imported will be dynamically determined at the time of import.

Is Zipped: This option specifies whether the file to be imported is contained in a zip or gzip file. When selected, Ursa Studio will unzip the file during the import process. If the zip file is encrypted, a field for entering the file’s password is displayed. The password is not saved in the database for subsequent re-use. Note that the encryption method is assumed to be standard zip or gzip encryption, not AES-256.

Preprocessing Script: Users can select a preprocessing script from a dropdown that includes some built-in scripts, such as for a Claims Reduction File (CRF), along with any of the bespoke models that have been created in the Advanced Analytics zone. These scripts are meant to transform the selected file to CSV or related format, after which the rest of the import workflow will function per usual. If the bespoke model requires parameters, those parameter will be prompted for via input fields that will appear when the model is selected.

Data Effective Date: The data effective date is the date the data was made available for use, e.g., if a monthly membership file for October was made available on November 5th, the data effective date would be November 5th. When the import file's data effective date is not imputed from the file's name, it can be manually entered in this field. If this field is left blank, the date of the import will be considered the data effective date. Note the value in this field is stored on a per-import basis and not as a single value for the object itself and is available as metadata via the semantic mapping object.

Data Covered Start Date: The date representing the beginning of the period covered by the file being imported, e.g., a monthly membership file for October would have a data covered start date of October 1st. Note the value in this field is stored on a per-import basis and not as a single value for the object itself and is available as metadata via the semantic mapping object.

Data Covered End Date: The date representing the end of the period covered by the file being imported, e.g., a monthly membership file for October would have a data covered end date of October 31st. Note the value in this field is stored on a per-import basis and not as a single value for the object itself and is available as metadata via the semantic mapping object.

Delimiter: By default, Ursa Studio assumes a csv file is being imported, and if this is true, nothing needs to be entered in this field. However, the default value can be overwritten with the appropriate delimiter as needed.

Tab-Delimited: When selected, this option specifies the file being imported is tab delimited.

Fixed-Width: When selected, this option specifies a fixed-width file is being imported. In this case, a data dictionary must also be supplied. The data dictionary should be a csv file in either a two-column or a three-column variant. The two-column variant is in the form "column_name, column_width," with the assumption that all the columns are adjacent. The three column variant is in the form "column_name, column_start_index, column_end_index," where the start index is inclusive.

Quote Character: By default, Ursa Studio assumes a csv file is being imported, and if this is true, nothing needs to be entered in this field. However, the default value can be overwritten with the appropriate quote character as needed.

Has Header: Selected by default, this option specifies the file being imported has column headers in its first row. When a header is present, the import tool will generate its column names from the header text. If no header is present, a user must attach a data dictionary with a single column containing field names.

Following the initial file import, the Configuration panel will have an additional option:

Upon Reload: This option providers users two options for specifying how subsequent file imports will be handled. First, users can select to overwrite the existing table, which instructs the object to perform a full refresh when each new file is imported. Second, users can select to append to the existing table, which instructs the object to update existing rows and add new ones with each file imported. When this second option is selected, the following additional option is displayed:

Overwrite upon match of: This option enables users to select which key field(s) should be used to update existing rows in the table.

Prevent Duplicate Import: If this option is selected in the context of a range import, any filename that has already been imported since the most recent prior overwrite will be removed from the range of files to be imported. If the option is selected outside the context of a range import, attempts to append a file with the same filename as one that has already been imported since the most recent prior overwrite will result in an ELT error.

When import objects with "Prevent Duplicate Imports" are added to an ETL under the "Run This and Downstream" instruction, these objects will be skipped if there are no actionable files to import, along with any downstream objects that are in the ELT on account of being in their downstream.

Object Metadata

.png)

Source: Select the option that represents the file’s source, e.g., if the file was provided by Humana this source might be “Humana”. To add a new source option, select the “Manage Sources” button and add a new source in Integration Manager. Note the value in this field is stored on a per-import basis and not as a single value for the object itself. This metadata is available for downstream transformation via the semantic mapping object.

Is Visible to Layers: This option enables users to define the data model layers that will be able to view and access the import object. By default, the “Use Layer Default” option is checked, which is the sensible choice. Unless, a file is being imported for an unusual purpose, leave this option as is.

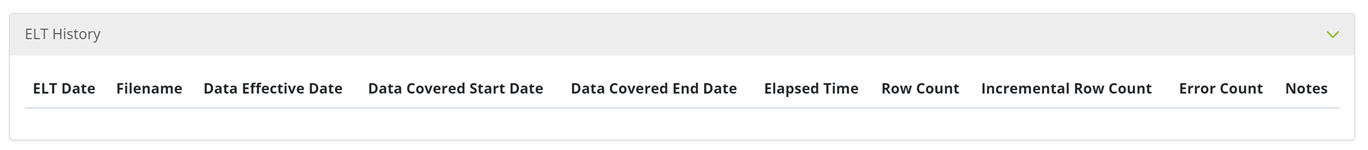

ELT History

This panel captures the following metadata specific to each file imported into the object:

- ELT Date

- Filename

- Data Effective Date

- Data Covered Start Date

- Data Covered End Date

- Elapsed Time

- Row Count

- Incremental Row Count

- Error Count

- Notes

Revision History

As its name suggests, this panel contains a timestamped change log that keeps track of all revisions to the object. Users can revert back to a previous version of the object by selecting the "Recover" button to the right of the timestamp and comment. When selected, users are prompted with two options. First, the recovered version of the object can be created as a new object, which will leave the current version unchanged. Second, the recovered version of the object can replace the existing version of the object. When this option is selected, the existing version of the object will receive a record in the change log and can be recovered in the future if desired.

Cloud-Based Import

For implementations in the cloud, the preferred method for importing source data files is to import the file from a designated bucket or storage container, instead of directly from the user’s hard drive. In these cases, the import screen automatically discovers the available storage containers and allows users to pick from among the files it sees in them.

Due to limitations in the AWS and Azure APIs, the Import object screen is only ever able to list 1000 files within a bucket. For buckets containing over 1000 files, users have had to enter the full name of the file to be imported, which has been problematic in the circumstances where the users do not know the exact name of the file and do not have personal access to the bucket to verify the filename. Now, when Ursa Studio recognizes that the filename list is being truncated to 1000 by the AWS or Azure API, it will provide an optional extra control in the Import object screen by which users can enter a prefix string to act as a filter, and the list of available files in the filename dropdown will be limited to only those files that start with the prefix string. If the files exist in a subdirectory, the prefix string should be the subdirectory name, and not the beginning of the actual filename. Range imports and date-imputation imports will throw an error if even considering the filename filter prefix there are too many files returned by the list-bucket query to responsibly frame the list of files under consideration.

Multiple files, which are contiguous within a single storage container, can be imported at the same time. Users can choose the start and end files of the range to be imported, and Ursa Studio will import them one after the other, in alphabetical order, appending each subsequent file and applying the chosen append key to de-duplicate keys. The original file can still be set to either overwrite or append if the table already exists.

When range imports are activated, the range import will normally iteratively import every file, starting with the chosen filename and ending with the range end filename, in alphabetical order. However, in the circumstance where there are filenames that are between the start of the range and the end of the range which must be skipped, those files can now be screened out by setting a case-sensitive secondary filter for the range. If the user chooses the "unbounded" option from the range end filename in the import object screen, Ursa Studio will import all files matching the filename filter prefix that are alphabetically after the range start filename.

Import objects within cloud environments will display a “File Preview” button, which lets users see the first ten lines of the file, as well as the file's size, type, and last-updated date. The access of this information will be logged in the PHI audit log.

Automated Import

Every import needs to know the data effective date of the file to be imported, which is typically specified via an input in the Import Object screen. For cloud-based deployments it's possible to set Ursa Studio up to automatically impute the data effective date based on the filename, using the "Impute Data Effective Date from Filename" checkbox. By default, the date format mask is assumed to be YYYYMMDD and is also assumed to be at the end of the filename. Both of these defaults can be overridden; the user can enter any mask consisting of the characters Y, M, D, period, underscore, and hyphen. If the date string is not at the end of the filename, the user can specify the (1-indexed) start character of the date string. This is especially useful if there are multiple numeric strings in the file name.

Imputing the data effective date from the filename unlocks the possibility of running the import object via the Object Workshop dashboard screen, as well as automating the import with via a Saved ELT.

Imputing the data effective date from the filename also unlocks the possibility of choosing a generic, masked filename as the file to be imported. For example, if there is an existing file named "my_import_20200101.csv" in the list of files, users will also see an option to import "Latest file matching my_import_YYYYMMDD.csv". If this option is selected, the actual file to be imported will be dynamically determined at the time of import. When such a file is imported, Ursa Studio will automatically delete all but the latest three files in the import container that match the filename mask upon successful import, so as to keep the container at a manageable size if an automated process is periodically uploading files. This option can also be used with range imports.

When setting up import objects to impute the data effective date from the filename and to use a mask to dynamically choose the most recent filename in the container matching that masked filename, users might run into the problem that there are characters in the filename which are not part of the date mask but which should nevertheless be allowed to vary from filename to filename while still being considered "matches". An example of this could be if the filenames have not just the date in the filename but a time value as well; these time values should be effectively ignored. To perform these imports, users are now able to enter the character * (asterisk) as a single-character wildcard. For example, they can type my_file_YYYYMMDD******.txt as the storage filename. To facilitate this typing, a clipboard icon has been added alongside the storage container name, which will copy the current filename into the clipboard. Users can then immediately paste the filename into the dropdown and replace the variable characters with asterisk.

While every import needs to know the data effective date of the file to be imported, data effective date is no longer a required field for import objects. If this value is left blank, the date of the import will be considered the data effective date. This now allows for import objects without imputed dates to be run directly from the Object Workshop dashboard, or added to a Saved ELT. If a multi-file range import is set to impute the data effective date from the filename and a mid-range file has an invalid or missing date, the effective date will be set to the date of the import.

Ursa Studio supports a special treatment for imports that need to specify not only the data effective date but also the data coverage start and end dates. If the date format mask is exactly as follows: YYYYMMDD_YYYYMMDD_YYYYMMDD, then Ursa Studio will assume that the first of these dates represents the data effective date, and the other two represent the data coverage start and end dates, respectively.