1. Rationale

For customers with an existing footprint in the Azure cloud, the Ursa Hybrid Cloud is an attractive option that minimizes the implementation time and maintenance burden of the engagement while maintaining a high level of privacy and security. Under this approach, Ursa Health will deploy and maintain Ursa Studio within the same Azure data center as the customer’s preexisting Azure database. Data will flow to Ursa Studio and onward to end users as appropriate, but no sensitive data will be persisted on the Ursa-managed Azure services. In addition, access control can be managed by Azure, removing the need for IP address whitelisting, which is otherwise typical of Hybrid Cloud solutions.

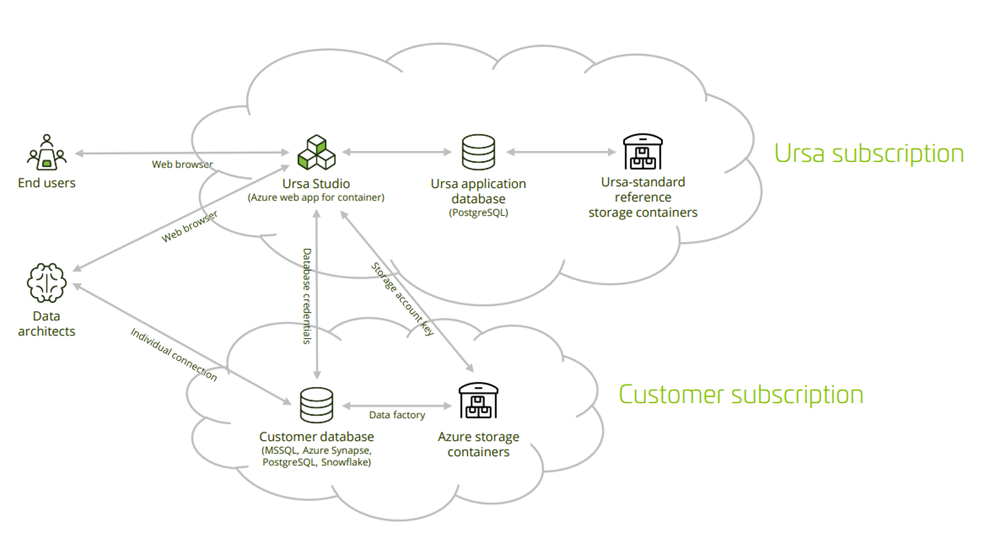

2. Overview

Whether managed by Ursa Health or by the customer, the Azure deployment for Ursa Studio makes use of five services, as follows:

• App Service for Containers

• Azure Database for PostgreSQL, serving as the application database that holds the metadata behind Ursa Studio

• Customer database on MSSQL, Postgres, Synapse, or Snowflake

• Storage Account

• Data Factory

In the Within-Azure Hybrid Cloud solution, Ursa Health will deploy and manage the first two of these five services, and the customer will deploy and manage the remaining three. The customer can either use a preexisting data warehouse or create a new one for the Ursa Health engagement.

This document is not particularly applicable to Snowflake deployments, which do not require or support VPC peering.

3. Diagram

4. Set up Database

The Hybrid Cloud setup is meant to be layered onto a preexisting Azure database. If no such database is in use before the Ursa Studio deployment, one will need to be provisioned. Ursa Studio is compatible with Azure SQL Database (MSSQL), Azure Database for PostgreSQL, Synapse, and Snowflake, among other databases. The choice of database, and its recommended size, will depend on the size and scope of the data to be transformed.

By convention, Ursa Studio does its work through a user named ursa, operating with read-write privileges in a schema also named ursa. Any people with individual accounts working in the database can inherit from the ursa_admin role. These conventions are not set in stone, so different user and schema names can be used if desired. When creating the service account password, avoid the hash character (#) and any other character that would require URI escaping. Dash and underscore are both safe.
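A candidate password can be checked against the URI-escaping restriction before it is set. The following is a minimal sketch using only Python's standard library. The helper name is_uri_safe is hypothetical and not part of Ursa Studio. It treats a password as safe when percent-encoding would leave it unchanged.

```python
from urllib.parse import quote


def is_uri_safe(password: str) -> bool:
    """Return True if percent-encoding would leave the password unchanged."""
    # With safe="" only letters, digits, and the characters - _ . ~ are left
    # unescaped, so any other character makes the encoded form differ.
    return quote(password, safe="") == password


if __name__ == "__main__":
    # Illustrative candidates only; do not reuse these as real passwords.
    for candidate in ("choose-a_strong-password", "bad#password"):
        print(candidate, is_uri_safe(candidate))
```

This check covers only the escaping concern. It says nothing about password strength, which still needs to be judged separately.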

4.1 Database Commands

# create role ursa_admin;

# create schema ursa;

# create user ursa with encrypted password 'chooseastrongpasswordhere';

# grant usage on schema ursa to ursa;

# grant all on schema ursa to ursa;

5. Set up Storage Account and Data Factory

You will want to set up storage containers for flat file inflow to and (if desired) outflow from the database. Notably, Synapse cannot import data directly from your workstation, so you must set up a storage container if you want to import any flat files into Synapse. You can also create a designated output storage container for exports from Ursa Studio. If you do so, be sure to let your Ursa Health implementation team know the name of this output storage container.

Ursa Studio will use Azure Data Factory to move data from the storage account to the database. Create a service principal from Azure Cloud Shell in PowerShell mode, and save the returned credentials for later use.

az ad sp create-for-rbac -n ursahealth-factory-service-principal --role contributor --scopes /subscriptions/{your-subscription-uuid}/resourceGroups/{your-resource-group-name}

Furthermore, make sure that Microsoft.DataFactory is registered as a resource provider in your subscription. You can verify this in the Resource providers blade of your subscription.
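The registration check can also be scripted. The sketch below assumes the Azure CLI is installed and signed in, and that `az provider show` returns a JSON object containing a `registrationState` field. The function names are illustrative only.

```python
import json
import subprocess


def registration_state(provider_json: str) -> str:
    """Extract registrationState from `az provider show` JSON output."""
    return json.loads(provider_json).get("registrationState", "Unknown")


def fetch_provider_json(namespace: str = "Microsoft.DataFactory") -> str:
    """Run the Azure CLI and return its raw JSON output (requires `az`)."""
    result = subprocess.run(
        ["az", "provider", "show", "--namespace", namespace, "--output", "json"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


# Typical use on a machine where `az` is available and logged in:
#   print(registration_state(fetch_provider_json()))
```

If the reported state is anything other than Registered, running `az provider register --namespace Microsoft.DataFactory` should request registration for the subscription.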

6. Connect Clouds

6.1 Allow Database Connection Access

From within your database resource, set your database to allow access to Azure applications. Be sure to enforce SSL, and mandate minimum TLS of 1.2, in the same tab.

6.2 Share Storage Account and Data Factory Service Principal credentials with Ursa Health

Let the Ursa Health implementation team know the service principal’s App ID, Password, and Tenant ID, as returned by the service principal creation command in step 5. In addition, let them know your subscription UUID, resource group name, storage account name, and a SAS token encompassing the account and container scopes for that storage account. Alternatively, you can send one of the storage account keys instead of a SAS token.
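As a sketch of collecting the three service principal values, the snippet below assumes the output of the `az ad sp create-for-rbac` command from step 5 was saved as JSON, and that it uses the keys `appId`, `password`, and `tenant`, as recent Azure CLI versions appear to do. The function name and the output labels are hypothetical.

```python
import json


def service_principal_summary(saved_output: str) -> dict:
    """Map the saved create-for-rbac JSON to the three values to share."""
    data = json.loads(saved_output)
    # Fail loudly if the CLI output does not have the assumed shape.
    missing = [key for key in ("appId", "password", "tenant") if key not in data]
    if missing:
        raise KeyError(f"missing expected keys: {missing}")
    return {
        "App ID": data["appId"],
        "Password": data["password"],
        "Tenant ID": data["tenant"],
    }


if __name__ == "__main__":
    # Placeholder values only; substitute the JSON saved in step 5.
    example = json.dumps({
        "appId": "app-id-placeholder",
        "displayName": "ursahealth-factory-service-principal",
        "password": "secret-placeholder",
        "tenant": "tenant-id-placeholder",
    })
    for label, value in service_principal_summary(example).items():
        print(f"{label}: {value}")
```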

6.3 Create service account credentials for the customer database

Let the Ursa Health implementation team know the database credentials (including the hostname, port, database name, username, and password) of the user you set up in step 4.1. Make sure you have chosen a strong password. Our best practice is to give the service account user read-only access to the necessary source schemas, and read/write access to a dedicated “ursa” schema, in which Ursa Studio will conduct its transformations.